Guys I might be an e/acc

I read If Anyone Builds It, Everyone Dies (IABIED) and nodded along like everyone else, mostly agreeing with the argument but having minor quibbles about the details or the approach. However, I was recently thinking, “how in support of an AI pause am I, actually?” The authors of IABIED were pretty convincing, but I also know I have different estimates of AI timelines and p(doom) than the authors do. Given my own estimates, what should my view on an AI pause be?

I decided to do some rough napkin math to find out.

A (current number of deaths per year): 60 million

B (guess for years until AGI, no pause): 40 years

C (pause duration, let’s say): 10 years

D (years until AGI, given a pause): B + C = 50 years

E (guess for p(doom), given no pause): 10%

F (guess p(doom) given a pause): 5%

G (current world population, about): 8 billion

H (deaths before AGI, given no pause): A * B = 2.4 billion

I (expected deaths from doom, given no pause): E * G = 800 million

J (total expected deaths, given no pause): H + I = 3.2 billion

K (deaths before AGI, given a pause): A * D = 3 billion

M (expected deaths from doom, given a pause): F * G = 400 million

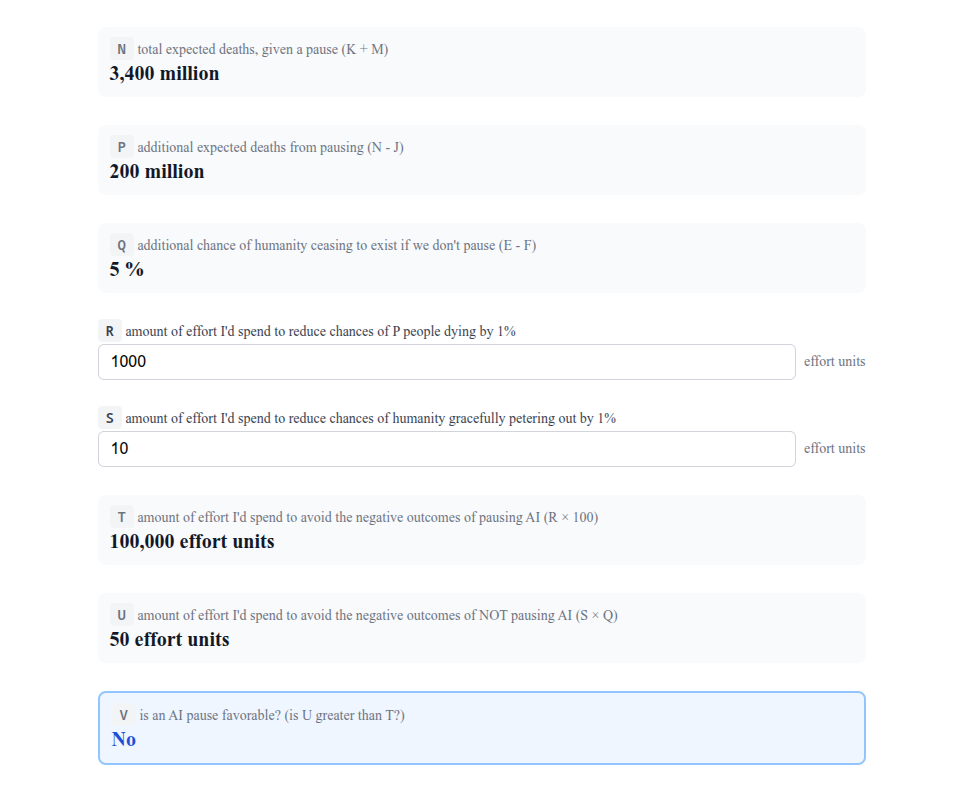

N (total expected deaths, given a pause): K + M = 3.4 billion

P (additional expected deaths from pausing): N - J = 200 million

Q (additional chance of humanity ceasing to exist if we don’t pause): E - F = 5%
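The list above can be sketched as a few lines of Python, in case you want to check the arithmetic or swap in your own guesses. The function and parameter names are my own labels for illustration, the defaults are just the rough guesses from the list, and doom deaths are taken as p(doom) times world population (as in item I).

```python
def pause_napkin_math(
    deaths_per_year=60e6,    # A: rough guess
    years_to_agi=40,         # B: rough guess, no pause
    pause_years=10,          # C: assumed pause length
    p_doom_no_pause=0.10,    # E: rough guess
    p_doom_pause=0.05,       # F: rough guess
    population=8e9,          # G: roughly the current world population
):
    """Expected deaths with and without a pause, under the list's assumptions."""
    years_to_agi_pause = years_to_agi + pause_years                   # D
    deaths_before_no_pause = deaths_per_year * years_to_agi           # H
    doom_deaths_no_pause = p_doom_no_pause * population               # I
    total_no_pause = deaths_before_no_pause + doom_deaths_no_pause    # J
    deaths_before_pause = deaths_per_year * years_to_agi_pause        # K
    doom_deaths_pause = p_doom_pause * population                     # M
    total_pause = deaths_before_pause + doom_deaths_pause             # N
    return {
        "J": total_no_pause,
        "N": total_pause,
        "P": total_pause - total_no_pause,         # extra expected deaths from pausing
        "Q": p_doom_no_pause - p_doom_pause,       # extra extinction risk without a pause
    }


result = pause_napkin_math()
print(f"J = {result['J'] / 1e9:.1f} billion")
print(f"N = {result['N'] / 1e9:.1f} billion")
print(f"P = {result['P'] / 1e6:.0f} million")
print(f"Q = {result['Q']:.0%}")
```

Calling `pause_napkin_math` with different keyword arguments is the quickest way to see how sensitive P is to each guess.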

If we pause AI, then based on my estimates, we’ll see an extra 200 million deaths, and the chance of humanity ceasing to exist is halved from 10% to 5%. Is that worth it? That depends on your values.

Let’s say I found out 200 million people were going to die from some kind of meteor strike or something. What lengths would I go to in order to convince people of this? I think the project would become my life. I would be writing essays, calling the news, calling the president – screaming from the mountaintop. If I thought my actions could even reduce the chances of this happening by 1%, I would do it.

Now let’s say I found out that in 200 years, humanity was going to end. For some complex reason, humanity would become infertile in 200 years, and that would be the end. Some kind of quirk in our DNA, or some kind of super virus. Or, let’s say people just start having robot babies instead of human babies, because they’re somehow more appealing, or human embryos are secretly replaced by robot embryos by some evil cabal. Anyway, it’s a situation we can only prevent if we start very soon. But let’s say I knew the humans at the end of humanity would be happy. Their non-conscious robot babies acted just like human babies, seemed to grow into people, etc. But once the final human died, the robots would power down and the universe would go dark. I’m trying to create a hypothetical where we have to consider the actual value of humanity having a future, irrespective of the suffering and death that would normally accompany humanity coming to an end. Let’s say I’m convinced this is going to happen, and I think that if I dedicated my life to stopping it, I could reduce the chances of it happening by 1%. Would I do it?

No way. Maybe the pure ego of being the first person to discover this fact would drive me to write a few essays about it, or even a book. But the eventual extinguishment of humanity just wouldn’t be important enough for me to dedicate my life to. It’s not that I don’t care at all, I just mostly don’t care.

For me, I have empathy and therefore want people who exist (or who will exist) to be happy, not suffer, and stay alive. I don’t care about tiling the universe with happy humans. When people place some insanely high value on humanity existing millions of years into the future, that seems to me to be the output of some funny logical process, rather than an expression of one’s actual internal values.

Let’s do some more napkin math and see how this relates to an AI pause.

R (amount of effort I’d spend to reduce chances of P people dying by 1%): 1000 arbitrary effort units

S (amount of effort I’d spend to reduce chances of humanity gracefully petering out by 1%): 10 arbitrary effort units

T (amount of effort I’d spend to avoid the negative outcomes of pausing AI): R * 100 = 100,000 arbitrary effort units

U (amount of effort I’d spend to avoid the negative outcomes of NOT pausing AI): S * Q = 10 * 5 = 50 arbitrary effort units (with Q counted in percentage points)

V (is an AI pause favorable?): is U greater than T? Nope.
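Here is the same comparison as a minimal Python sketch. The names are hypothetical labels of mine, Q is counted in percentage points, and the factor of 100 on R reflects my reading that the extra deaths from pausing are treated as certain (100 percentage points) rather than as a risk.

```python
def effort_comparison(r=1000, s=10, q_points=5, certainty_points=100):
    """Compare effort units for and against a pause.

    r: effort to cut the chance of P people dying by 1 point (R)
    s: effort to cut the chance of humanity petering out by 1 point (S)
    q_points: extra extinction risk without a pause, in percentage points (Q)
    certainty_points: assumed 100, i.e. the pause's extra deaths are treated as certain
    """
    t = r * certainty_points   # T: effort to avoid the downside of pausing
    u = s * q_points           # U: effort to avoid the downside of not pausing
    return {"T": t, "U": u, "favors_pause": u > t}


print(effort_comparison())
```

With these defaults T is far larger than U, so `favors_pause` comes out false. Raising `s` (how much you value humanity's long-term future) is the lever that flips it.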

So, according to this math, I do not favor an AI pause. But I wouldn’t really say I’m “for” or “against” a pause, because I’m not confident enough in these numbers to take a strong position. The math inherits the uncertainty in my underlying guesses and rests on a lot of assumptions. The idea was just to put down on paper what I believe and see roughly what follows from those beliefs, rather than passively absorbing an attitude toward AI from my environment.

I made plenty of simplifying assumptions here, including: the population won’t increase, the number of deaths per year will stay roughly constant until AGI, the AI pause will last 10 years, capabilities won’t advance at all during the pause (not even through theoretical research), and AI kills everyone instantly or not at all. I also didn’t factor in suffering directly; I just used death as a proxy.

There may also be factors you think are important I didn’t include, like the inherent value of non-human (AI) life, the inherent value of animal life/suffering, etc. So feel free to create your own version.

Whether or not you favor a pause might come down to how much you value the lasting future of humanity. Or if you have IABIED-like timelines and p(doom), then there may be a clear case for a pause even in terms of human lives.
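To see where the lives-only case flips, note that under the simplifications in this post the extra expected deaths from pausing work out to A * C - (E - F) * G, taking doom deaths as p(doom) times population. The sketch below computes the break-even drop in p(doom) that a pause would need to deliver. The function names are hypothetical, and the defaults are the post's rough guesses.

```python
def break_even_doom_reduction(deaths_per_year=60e6, pause_years=10, population=8e9):
    """Drop in p(doom) at which a pause costs zero net expected lives.

    Solves A * C - (E - F) * G = 0 for (E - F).
    """
    return deaths_per_year * pause_years / population


def pause_saves_lives(p_doom_no_pause, p_doom_pause, **kwargs):
    """True if the pause lowers total expected deaths in this simplified model."""
    reduction = p_doom_no_pause - p_doom_pause
    return reduction > break_even_doom_reduction(**kwargs)


print(f"break-even reduction: {break_even_doom_reduction():.1%}")
print(pause_saves_lives(0.10, 0.05))
```

In this simplified model the years-until-AGI guess (B) cancels out of the comparison, so what matters for the lives-only case is how much a pause lowers p(doom) relative to the deaths that accrue during the pause.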

I had Claude create a calculator version of my napkin math, so you can try entering your own assumptions into the calculator to see whether you’d be for or against an AI pause. Try it here. (You should choose a negative R value if P is negative!)